Fit vs. predictive power of statistical models

February 29, 2008 – 8:42 pm

I had an interesting conversation yesterday with INSTAAR post-doc and statistical modeling whiz Chris Randin. We were discussing the relative importance of model fit and model predictive power in multivariate statistical models. Basically, I was arguing that we should optimize our models for predictive power and not for fit, especially because you’re probably going to overfit anyway. Chris countered convincingly that optimizing for fit and optimizing for predictive power give you different kinds of information.

To back up and give an example, say you have a response variable like nitrogen dioxide pollution levels across a landscape, and a bunch of predictor variables like temperature, moisture, vegetation greenness, and human population across the same landscape. You want to figure out interesting stuff about the relationships between these predictor variables and the response variable. This situation is increasingly common in our data-saturated world.

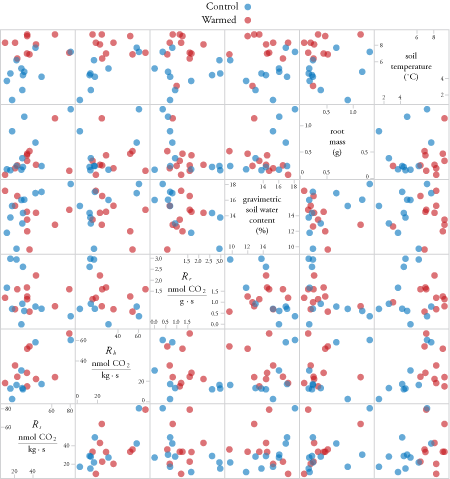

One thing you could figure out is the strength of the correlations between individual predictive variables and the response variable (nitrogen dioxide levels) using something like a scatterplot matrix like this one (just an example):
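As a rough sketch of the pairwise numbers a scatterplot matrix lets you eyeball, the Python snippet below computes the Pearson correlation between each predictor and the response, one pair at a time. It assumes NumPy, and the data are simulated: the variable names, distributions, and coefficients are hypothetical stand-ins for the landscape variables described above, not data from any real study site.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 200
# Hypothetical, simulated stand-ins for the landscape variables in the post.
predictors = {
    "temperature": rng.normal(15, 5, n),
    "moisture": rng.uniform(0, 1, n),
    "greenness": rng.uniform(0, 1, n),
    "population": rng.lognormal(3, 1, n),
}
# Made-up relationship: NO2 rises with temperature and population, falls with greenness.
no2 = (0.8 * predictors["temperature"]
       + 0.05 * predictors["population"]
       - 10 * predictors["greenness"]
       + rng.normal(0, 3, n))

# Pearson correlation of each predictor with the response, one pair at a time.
correlations = {name: float(np.corrcoef(x, no2)[0, 1])
                for name, x in predictors.items()}
for name, r in sorted(correlations.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:12s} r = {r:+.2f}")
```

If pandas and matplotlib are installed, `pandas.plotting.scatter_matrix` draws the plot itself from a DataFrame of the same columns.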

So, for each pair you fit one predictor to one response. The model's parameters connect the predictor to the response, and how closely the fitted line matches the data is your fit (r²). The slope and intercept coefficients are readily interpretable (good old y = mx + b).
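A minimal sketch of the one-predictor case, assuming NumPy and simulated data (the temperature and NO2 values, the true slope of 2, and the intercept of 5 are made up for illustration; `simple_fit` is a hypothetical helper name). It fits y = mx + b by least squares and computes r² as one minus the ratio of the residual sum of squares to the total sum of squares.

```python
import numpy as np

def simple_fit(x, y):
    """Least-squares fit of y = m*x + b; returns (m, b, r2)."""
    m, b = np.polyfit(x, y, deg=1)           # coefficients come highest degree first
    y_hat = m * x + b
    ss_res = np.sum((y - y_hat) ** 2)        # variation the line leaves unexplained
    ss_tot = np.sum((y - y.mean()) ** 2)     # total variation around the mean
    r2 = 1.0 - ss_res / ss_tot
    return float(m), float(b), float(r2)

rng = np.random.default_rng(0)
temperature = rng.uniform(0, 30, size=100)                  # hypothetical predictor
no2 = 2.0 * temperature + 5.0 + rng.normal(0, 3, size=100)  # hypothetical response
m, b, r2 = simple_fit(temperature, no2)
print(f"slope={m:.2f} intercept={b:.2f} r2={r2:.3f}")
```

The recovered slope and intercept land close to the values used to simulate the data, which is what makes the single-predictor case so easy to interpret.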

It gets more complicated, though, when you start using multiple predictors. The fitted parameters are harder to interpret, and the fit to the data you trained on never gets worse when you add more terms to the model (for nested least-squares models, r² can only stay the same or go up). The model with all variables and all of their interactions will therefore always give the best fit by r² or any other measure that doesn't penalize complexity. Statisticians have thought of this, of course, so you can penalize the model for each additional term (adjusted r², AIC, and BIC are common ways to do this), though I am a little skeptical of these penalties because their magnitude seems somewhat arbitrary (maybe I just don’t understand the genius behind the techniques).

Stepwise regression is a technique for working through many candidate model formulations with different variable combinations and deciding which one is best, though again, I am skeptical of the criteria for variable inclusion that go into stepwise-type procedures, and I am not the only one. Most statistical modeling books have a very sad chapter about how hard this process is and all of the heinous pitfalls involved.
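To illustrate the point about adding terms, here is a sketch with simulated data, assuming NumPy: one predictor that really matters plus ten pure-noise predictors, all hypothetical. It fits a sequence of nested least-squares models and reports r², adjusted r², and a Gaussian AIC (written up to an additive constant). The helper name `ols_stats` is illustrative. r² never decreases as the noise columns are added, while the two penalized measures charge for each extra coefficient at a rate fixed by their formulas (2 per coefficient for AIC).

```python
import numpy as np

def ols_stats(X, y):
    """Fit ordinary least squares with an intercept; return (r2, adj_r2, aic)."""
    n = len(y)
    X1 = np.column_stack([np.ones(n), X])        # prepend an intercept column
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    rss = float(resid @ resid)                   # residual sum of squares
    tss = float(np.sum((y - y.mean()) ** 2))     # total sum of squares
    k = X1.shape[1]                              # fitted coefficients, incl. intercept
    r2 = 1.0 - rss / tss
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k)
    aic = n * np.log(rss / n) + 2 * k            # Gaussian AIC, up to a constant
    return r2, adj_r2, float(aic)

rng = np.random.default_rng(1)
n = 50
signal = rng.normal(size=n)             # one predictor that really matters
noise = rng.normal(size=(n, 10))        # ten predictors that are pure noise
y = 1.5 * signal + rng.normal(size=n)

results = []
for extra in range(11):                 # nested models: add noise columns one at a time
    X = np.column_stack([signal, noise[:, :extra]])
    results.append(ols_stats(X, y))
    r2, adj_r2, aic = results[-1]
    print(f"{1 + extra:2d} predictors: r2={r2:.3f}  adj_r2={adj_r2:.3f}  AIC={aic:.1f}")
```

Whether the penalized measures end up preferring the one-predictor model depends on the particular random draw, which is part of why the size of the penalty matters so much.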

But what if we define the best model as the one with the best predictive power? Then model selection has a really clear criterion. There are a lot of great techniques, such as cross-validation and bootstrapping, that test a model’s predictive power by estimating how well it would predict new data rather than the data it was fit to. We could run a whole lot of candidate models through these tests and get a printout of how well each model does under each test. I don’t think this approach is taken very often, but if prediction is what you are interested in, it may be the best way to go. Chris is right, though, that some aspects of your data might be better explored by optimizing for fit. I’ll probably have more to say on this as I build more of my own models with the data from my study site.
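One way to put the "best predictive power" criterion into practice is k-fold cross-validation: split the data into k folds, fit each candidate model on the other k - 1 folds, and score its predictions on the held-out fold. The sketch below assumes NumPy, ordinary least squares, 5 folds, and simulated data; the predictor names echo the example earlier in the post, but the values, the true relationship, the candidate list, and the helper names `fit_ols` and `kfold_mse` are all hypothetical. A bootstrap version would follow the same pattern with resampled training sets.

```python
import numpy as np

def fit_ols(X, y):
    """Least-squares coefficients (intercept first) for predictor matrix X."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta

def kfold_mse(X, y, k=5, seed=0):
    """Average squared prediction error on k held-out folds."""
    idx = np.random.default_rng(seed).permutation(len(y))
    fold_errors = []
    for test in np.array_split(idx, k):
        train = np.setdiff1d(idx, test)          # every row not in this fold
        beta = fit_ols(X[train], y[train])       # fit without the held-out rows
        pred = beta[0] + X[test] @ beta[1:]      # predict the held-out rows
        fold_errors.append(np.mean((y[test] - pred) ** 2))
    return float(np.mean(fold_errors))

# Hypothetical stand-ins for the post's predictors; the data are simulated.
rng = np.random.default_rng(2)
n = 80
data = {name: rng.normal(size=n)
        for name in ["temperature", "moisture", "greenness", "population"]}
no2 = 0.5 * data["temperature"] + 3.0 * data["population"] + rng.normal(size=n)

candidates = {
    "temperature only": ["temperature"],
    "temperature + population": ["temperature", "population"],
    "all four": ["temperature", "moisture", "greenness", "population"],
}
cv = {label: kfold_mse(np.column_stack([data[c] for c in cols]), no2)
      for label, cols in candidates.items()}
for label, mse in sorted(cv.items(), key=lambda kv: kv[1]):
    print(f"{label:26s} CV mean squared error = {mse:.3f}")
best = min(cv, key=cv.get)   # lowest held-out error wins
```

This is the "printout" idea in its simplest form; in practice you would also look at how much the error varies from fold to fold before declaring a winner.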

3 Responses to “Fit vs. predictive power of statistical models”

When I think about models in the context of science, I want them to fit! A model that can simulate observed data well can help us better understand the underlying mechanism of how things work. Isn’t that what we want in science, better understanding of the natural world? Models with predictive power are good for policy and economics. But that doesn’t mean predictive models shouldn’t be tested and developed for scientific purposes; they help sell the science to our potential sponsors and make scientists prophets for the public. Our crystal ball. Bwahahaha~!

By Brian Seok on Mar 11, 2008

Brian, I think that your comment points out something really important. The decision between these types of approaches to data is a philosophical matter: it’s the epistemology of statistics that is the hard part. Do we want “underlying mechanism” or “predictive power” and are either of those even achievable? Once I decide what I want to know, then it becomes easier because it’s a mathematical issue of picking an appropriate algorithm. In other words, knowing what the right question is and whether that question is answerable can be harder than answering it. I’d like to see statisticians address this type of thing more often, perhaps with help from the epistemology experts in the philosophy department.

By Anthony on Mar 20, 2008

It also seems to me that overfitting might appear to improve the relationships in the small space under study but lead to very poor predictions under a different set of circumstances.

I would tend to think that the simplest model possible in terms of mathematical complexity should also be the most powerful in terms of prediction.

The factors identified in one part of the space might not have the same weight in another part of the data space.

By Bernard on Apr 15, 2008
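Bernard’s point about overfitting and prediction outside the range of the data can be sketched with simulated data, assuming NumPy: a straight line and a degree-9 polynomial are both fit to points drawn from a hypothetical linear relationship on 0 ≤ x ≤ 1, then scored on new points from 1 ≤ x ≤ 2. The true line, noise level, sample sizes, and degrees are made up for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

def truth(x):
    """Hypothetical linear relationship generating the data."""
    return 2.0 * x + 1.0

def mse(p, x, y):
    """Mean squared error of polynomial p's predictions at x."""
    return float(np.mean((y - p(x)) ** 2))

rng = np.random.default_rng(3)
x_train = np.sort(rng.uniform(0.0, 1.0, 20))   # the "small space under study"
y_train = truth(x_train) + rng.normal(0, 0.1, x_train.size)
x_new = np.linspace(1.0, 2.0, 20)              # a different set of circumstances
y_new = truth(x_new) + rng.normal(0, 0.1, x_new.size)

results = {}
for degree in (1, 9):
    p = Polynomial.fit(x_train, y_train, deg=degree)  # least-squares polynomial fit
    results[degree] = (mse(p, x_train, y_train), mse(p, x_new, y_new))
    print(f"degree {degree}: train MSE={results[degree][0]:.4f}, "
          f"new-range MSE={results[degree][1]:.4f}")
```

The degree-9 polynomial fits the training points at least as well as the line, since a line is a special case of it, but its predictions in the new range are typically far worse.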